Auditory Space, LLC offers a wide range of consulting services for perception scientists and technology developers:

- Software development and customization

- Systems integration, setup, and calibration

- Support and customization of existing software (e.g., APE and A-SPACE)

- Experimental design and implementation

- Psychophysical evaluation of algorithms and devices, including:

    - Discrimination and scaling

    - Multi-level adaptive tracking

    - Perceptual-weight assessment

    - Time-series and adaptation studies
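For readers unfamiliar with adaptive tracking, the sketch below implements a standard transformed up-down staircase: 2-down/1-up, which converges near 70.7% correct. It is a generic single-track illustration and does not implement multi-level tracking. The function names, starting level, step size, and stopping rule are assumptions chosen for clarity.

```python
def two_down_one_up(respond, start_level=60.0, step=4.0, n_reversals=8, max_trials=500):
    """Run a 2-down/1-up adaptive track (hypothetical helper).

    respond(level) returns True for a correct trial. The level drops by `step`
    after two consecutive correct trials and rises by `step` after any error.
    Returns (levels_presented, reversal_levels).
    """
    level = start_level
    run_correct = 0       # consecutive correct responses at the current level
    last_direction = 0    # -1 = last step went down, +1 = up, 0 = no step yet
    levels, reversals = [], []
    for _ in range(max_trials):
        if len(reversals) >= n_reversals:
            break
        levels.append(level)
        if respond(level):
            run_correct += 1
            if run_correct < 2:
                continue  # wait for a second correct trial before stepping down
            run_correct = 0
            direction = -1
        else:
            run_correct = 0
            direction = +1
        if last_direction != 0 and direction != last_direction:
            reversals.append(level)  # the track changed direction at this level
        last_direction = direction
        level += direction * step
    return levels, reversals


def threshold_from_reversals(reversals, discard=2):
    """Average the reversal levels after discarding the first few."""
    kept = reversals[discard:]
    if not kept:
        raise ValueError("not enough reversals to estimate a threshold")
    return sum(kept) / len(kept)


if __name__ == "__main__":
    # Deterministic simulated listener: always correct above 40 (e.g., dB), always wrong at or below.
    levels, reversals = two_down_one_up(lambda lvl: lvl > 40)
    print(reversals)
    print(threshold_from_reversals(reversals))
```

In practice `respond` would present a trial to a listener, or sample from a psychometric function in simulation. The deterministic listener here simply makes the track easy to follow.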

We offer competitive rates and standard discounts for non-profit, academic, and federally funded research. Discounts of up to 100% are negotiable in support of early-stage research and of any project in which our staff act as co-investigators. Please contact info@auditory.space to inquire about standard rates or to discuss a flat-rate quote for your project.

The following past projects, completed variously through consulting, collaborative, and employment relationships, illustrate the breadth of consulting services we can provide.

Center for Auditory Neuroimaging, Seattle WA (2010-2013). Founded by Dr. Stecker at the University of Washington (UW) to provide critical support to neuroscientists who use sound to study the human brain, the Center pursued three specific aims addressing the unique challenges of working with sound in the acoustically and magnetically hostile environment of magnetic resonance imaging (MRI):

- Develop procedures for recording and measuring sound inside the UW 3T MRI scanner.

- Develop physical, acoustical, and computational tools to evaluate and calibrate audio-delivery systems for use in the MRI scanner.

- Evaluate brain-imaging protocols for reduction of acoustic noise and auditory interference.
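Measuring sound in the scanner ultimately requires converting a calibrated microphone recording into sound pressure level (SPL), using dB SPL = 20 log10(p_rms / 20 µPa). Below is a minimal sketch. It assumes a pressure microphone with a known sensitivity in V/Pa and a known ADC scale factor; both are hypothetical parameters, as are the function names. It does not model the MRI-specific constraints described above.

```python
import math

P_REF = 20e-6  # reference pressure for dB SPL in air, in pascals


def counts_to_pascals(samples, volts_per_count, mic_sensitivity_v_per_pa):
    """Convert raw ADC counts to sound pressure using assumed calibration constants."""
    scale = volts_per_count / mic_sensitivity_v_per_pa
    return [x * scale for x in samples]


def rms(samples):
    """Root-mean-square value of a list of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def db_spl(pressure_pa):
    """Sound pressure level (dB re 20 uPa) of a pressure waveform in pascals."""
    return 20.0 * math.log10(rms(pressure_pa) / P_REF)


def level_difference_db(target_pa, noise_pa):
    """Level of a target (e.g., a delivered stimulus) relative to a noise recording."""
    return db_spl(target_pa) - db_spl(noise_pa)
```

As a sanity check, a sinusoid with 1 Pa RMS pressure comes out at about 94 dB SPL. Comparing a stimulus recording with a scanner-noise recording via `level_difference_db` gives one simple figure of merit for a delivery system or imaging protocol.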

The tools and procedures resulting from these activities remain in use at UW. Many of the results were communicated to other MRI centers around the world, where they informed the selection and implementation of audio-delivery systems.

Vanderbilt Bill Wilkerson Center (VBWC) Anechoic Chamber Laboratory (ACL), Nashville TN (2014-2016). This unique research facility, originally established in 2005, provides 360 degrees of sound-field presentation to human listeners with virtually no contamination by echoes or reverberation. For the 2014 upgrade, we researched high-channel-count audio routing and amplification products, then recommended and designed a 64-output × 16-input Dante network (upgradeable to 512 × 512) for real-time control of all loudspeaker channels from a single research computer. We installed the network and developed a custom software framework (based on MATLAB and integrated with APE) for 64-channel signal delivery, with easily programmable routing for experiments that use fewer channels or require online control of loudspeaker destinations.
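Programmable routing of this kind can be pictured as a gain matrix from source signals to output channels. The sketch below is a language-neutral illustration, not the MATLAB framework itself. The function name, the dictionary-based routing table, and the 0-based channel numbering are all assumptions.

```python
N_OUTPUTS = 64  # loudspeaker channels on the output side of the network


def route(sources, routing, n_outputs=N_OUTPUTS):
    """Mix named source signals onto output channels (hypothetical helper).

    sources: {name: [samples]}, all of equal length
    routing: {name: {output_channel: gain}}, channels numbered from 0
    Returns n_outputs lists of samples; unused channels stay silent.
    """
    lengths = {len(s) for s in sources.values()}
    if len(lengths) > 1:
        raise ValueError("all source signals must have the same length")
    n_samples = lengths.pop() if lengths else 0
    out = [[0.0] * n_samples for _ in range(n_outputs)]
    for name, gains in routing.items():
        signal = sources[name]
        for channel, gain in gains.items():
            if not 0 <= channel < n_outputs:
                raise ValueError(f"channel {channel} is outside 0..{n_outputs - 1}")
            row = out[channel]
            for i, x in enumerate(signal):
                row[i] += gain * x  # sum contributions from every routed source
    return out
```

Under this model, an experiment that uses only a few loudspeakers just leaves most channels out of the routing table. Online control of loudspeaker destinations amounts to swapping in a new table between audio blocks.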

Virtual Room Simulation in the VBWC Anechoic Chamber Laboratory, Nashville TN (2015-2016). This software solution integrates with the 64-channel audio system implemented in 2014, adding a robust, extensible framework for object-based audio in MATLAB. Object waveforms are processed through virtual room impulse responses (RIRs) that reproduce a room's geometric, temporal, and material properties, then rendered over the ACL’s 64-loudspeaker array. The framework provides tools for constructing virtual rooms, specifying room parameters, calculating RIRs, and rendering object-based audio.
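The chain of room geometry, then impulse response, then loudspeaker array can be sketched for the simplest case. This sketch swaps in the classic image-source method, limited to first-order reflections in a rectangular ("shoebox") room. It assumes a single frequency-independent reflection coefficient, 1/r spreading, and nearest-loudspeaker placement on an evenly spaced horizontal ring. Elevation is ignored, so floor and ceiling reflections are folded into the horizontal plane. All names and parameter values are hypothetical, and this is not the ACL framework's RIR algorithm.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def first_order_paths(room, src, mic, reflection=0.8, fs=48000):
    """(delay_samples, gain, azimuth_deg) for the direct path and six wall reflections.

    room: (Lx, Ly, Lz) in metres; src, mic: (x, y, z) positions inside the room.
    Azimuth is measured counterclockwise from the +x axis, seen from the mic.
    """
    images = [(tuple(src), 1.0)]  # direct path: the source itself, no wall loss
    for axis in range(3):
        for wall in (0.0, room[axis]):
            img = list(src)
            img[axis] = 2.0 * wall - src[axis]  # mirror the source across this wall
            images.append((tuple(img), reflection))
    paths = []
    for img, wall_gain in images:
        d = math.dist(img, mic)
        delay = round(d / SPEED_OF_SOUND * fs)
        gain = wall_gain / d  # wall loss times 1/r spherical spreading
        az = math.degrees(math.atan2(img[1] - mic[1], img[0] - mic[0])) % 360.0
        paths.append((delay, gain, az))
    return paths


def nearest_speaker(az_deg, n_speakers=64):
    """Index of the nearest loudspeaker on an evenly spaced ring (speaker 0 at 0 deg)."""
    spacing = 360.0 / n_speakers
    return round(az_deg / spacing) % n_speakers


def render(signal, paths, n_speakers=64):
    """Place a delayed, scaled copy of `signal` on the nearest loudspeaker for each path."""
    length = len(signal) + max(p[0] for p in paths)
    out = [[0.0] * length for _ in range(n_speakers)]
    for delay, gain, az in paths:
        row = out[nearest_speaker(az, n_speakers)]
        for i, x in enumerate(signal):
            row[delay + i] += gain * x
    return out
```

A fuller simulation would add higher-order images, frequency-dependent absorption to represent wall materials, and amplitude panning between neighboring loudspeakers rather than snapping each path to the nearest one.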

National Center for Rehabilitative Auditory Research (NCRAR), Portland OR (2017). We adapted the room-simulation software to the NCRAR anechoic chamber laboratory. This 24-channel system provides the same capabilities as the original at slightly lower spatial resolution, and it is used to assess spatial hearing across the lifespan.

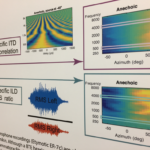

MATLAB toolkit for time-frequency analysis of spatial cues in binaural recordings (2015-2016). We developed custom MATLAB code that uses Dante input channels to capture sound from probe-tube microphones inserted into the ears of a human listener or a recording manikin. These binaural recordings can be used conventionally to prepare binaural stimuli for later presentation, or to capture a head-related transfer function (HRTF) for conventional “3D” virtual-audio applications. This project goes further by integrating binaural recording with software models of auditory processing in the human ear and brainstem. The models extract and map binaural features of sound across time and frequency; such maps are a first step toward automated binaural scene analysis for auditory augmented reality (AR).
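A central step in such maps is estimating interaural time and level differences (ITD and ILD) within short time frames. The sketch below uses plain cross-correlation and energy ratios on broadband frames. It omits the peripheral auditory model (for example, a gammatone filterbank) that a frequency-resolved analysis would apply first, so it covers only the time axis of the map. The function names, the ±1 ms lag limit, and the frame size are assumptions.

```python
import math


def itd_seconds(left, right, fs, max_lag_s=0.001):
    """ITD as the lag (s) that maximizes the left/right cross-correlation.

    A positive value means the right-ear signal lags the left, i.e. the
    source is toward the listener's left.
    """
    max_lag = int(round(max_lag_s * fs))
    n = min(len(left), len(right))
    best_lag, best_value = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        # Correlate left[i] with right[i + lag] over the overlapping samples only.
        lo, hi = max(0, -lag), min(n, n - lag)
        value = sum(left[i] * right[i + lag] for i in range(lo, hi))
        if value > best_value:
            best_lag, best_value = lag, value
    return best_lag / fs


def ild_db(left, right):
    """ILD in dB from frame energies; positive means the left ear is louder."""
    energy_l = sum(x * x for x in left)
    energy_r = sum(x * x for x in right)
    if energy_l == 0.0 or energy_r == 0.0:
        return math.nan  # undefined when either ear is silent
    return 10.0 * math.log10(energy_l / energy_r)


def binaural_map(left, right, fs, frame_len=1024, hop=None):
    """Frame-by-frame (time_s, itd_s, ild_db) triples for a binaural recording."""
    hop = hop or frame_len
    frames = []
    for start in range(0, min(len(left), len(right)) - frame_len + 1, hop):
        seg_l = left[start:start + frame_len]
        seg_r = right[start:start + frame_len]
        frames.append((start / fs, itd_seconds(seg_l, seg_r, fs), ild_db(seg_l, seg_r)))
    return frames
```

Running `binaural_map` on each output band of an auditory filterbank, rather than on the broadband signal, would yield the time-by-frequency layout described above.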

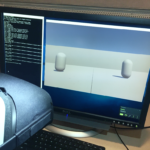

Incorporating VR displays in the Vanderbilt ACL, Nashville TN (2016-2018). This unique application of A-SPACE pairs flexible visuospatial interactions with auditory stimuli delivered from the anechoic chamber's loudspeaker array. We researched, recommended, and installed a hardware solution comprising a graphics-focused PC and an HTC Vive display, with VR sensors integrated into the loudspeaker array itself. A-SPACE is controlled by TCP/IP messages exchanged between the audio and VR computers over a dedicated local area network. The system allows natural combinations of visual and auditory spatial cues in both anechoic and simulated reverberant settings.
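A-SPACE's message format is not specified here, so the sketch below assumes a simple one: newline-delimited JSON objects with a `cmd` field, sent over a stream socket. The command names (`show_target`, `trial_end`), their parameters, and the helper functions are hypothetical. They only illustrate how an audio computer might tell a VR computer what to display.

```python
import json
import socket


def encode_message(cmd, **params):
    """Serialize one command as a newline-terminated JSON object (assumed format)."""
    return (json.dumps({"cmd": cmd, **params}) + "\n").encode("utf-8")


def send_message(sock, cmd, **params):
    """Send one command; sendall() keeps writing until every byte is sent."""
    sock.sendall(encode_message(cmd, **params))


def read_messages(sock, bufsize=4096):
    """Yield decoded messages from a stream socket until the peer closes it.

    TCP delivers a byte stream, not discrete messages, so incoming bytes are
    buffered and split on newlines.
    """
    buffer = b""
    while True:
        chunk = sock.recv(bufsize)
        if not chunk:
            break  # peer closed the connection
        buffer += chunk
        while b"\n" in buffer:
            line, buffer = buffer.split(b"\n", 1)
            if line:
                yield json.loads(line)


if __name__ == "__main__":
    # Local demonstration with a connected socket pair; on a real LAN the audio
    # computer would instead call socket.create_connection((vr_host, port)),
    # where vr_host and port are hypothetical placeholders.
    audio_side, vr_side = socket.socketpair()
    send_message(audio_side, "show_target", azimuth_deg=30.0, elevation_deg=0.0)
    send_message(audio_side, "trial_end")
    audio_side.close()
    for message in read_messages(vr_side):
        print(message)
    vr_side.close()
```

Newline framing keeps messages human-readable for debugging. A length-prefixed binary format would be an equally reasonable choice if message rates or sizes grew.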